An Era Beyond Centralized Cloud

The age of centralized cloud computing is yielding to a new frontier. At Microsoft Ignite 2024, CEO Satya Nadella unveiled Armada as the linchpin of Azure’s adaptive cloud, demonstrating its power to run remote industrial operations seamlessly, even with Starlink backhaul. This wasn't a casual spotlight on a nascent startup; it was a profound recognition that Armada’s solution addresses a global challenge with unprecedented scalability, setting the stage for a distributed future.

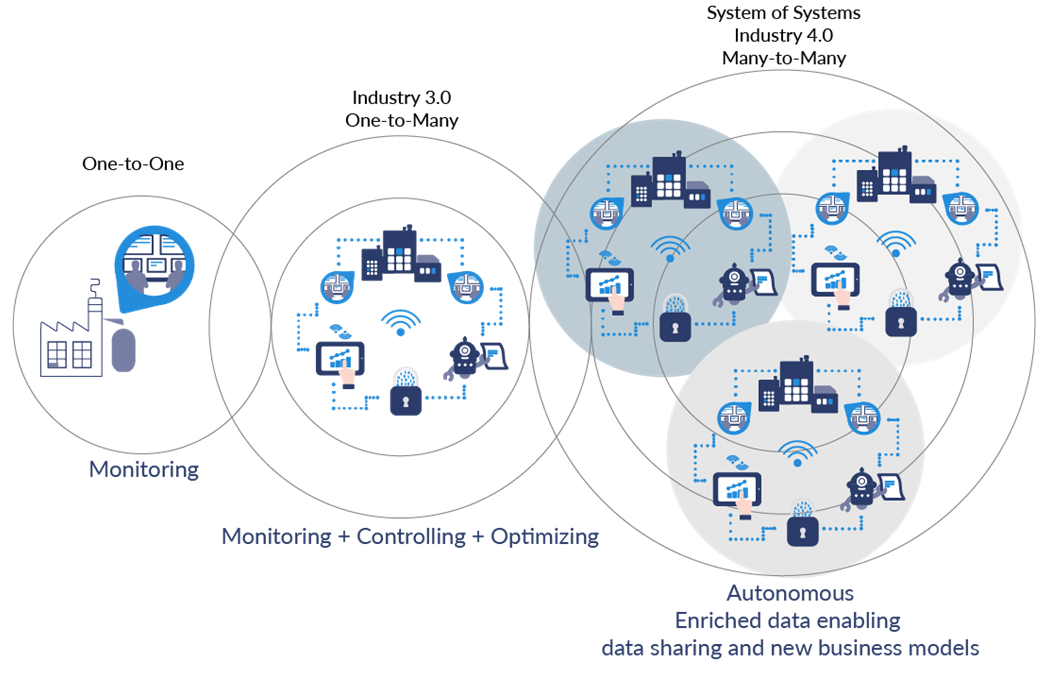

That challenge stems from the fourth industrial revolution, an era of rapid technological advancement and automation. Driven by an explosion in connected devices and the Internet of Things (IoT), businesses in Industry 4.0 draw on data from an installed base that could exceed 40 billion connected devices by 2030. Gartner also estimates that 75 percent of all enterprise data is being generated at the edge. When harnessed effectively, this data helps predict trends, manage inventories, smooth supply chains, and accelerate commerce globally. The promise was simple: connect everything and the business would see everything, with actionable intelligence managed from a smartphone, anywhere.

That promise collided with the shape and location of the data. IDC estimates that roughly 80 percent of global data will be unstructured, while about 90 percent of unstructured data goes unused. Video, imagery, and dense time series dominate at the edge. Mines, offshore platforms, vessels at sea, rail yards, remote warehouses, and distributed retail footprints generate far more data than their links can carry. Much of the planet still lacks basic connectivity—Northern Brazil, West Africa, parts of the Middle East, the Australian outback, and large rural regions across the United States and Canada. Traditional terrestrial network infrastructure is expensive, slow to deploy, and infeasible in many geographies. Unless customers can process data in real time and act on it, it serves only for retrospective analysis, and not as a day-to-day tool to help drive critical business decisions at every step. What good is data captured on an oil rig if the time it takes to process that data in a distant data center renders it irrelevant for preventing a disaster?

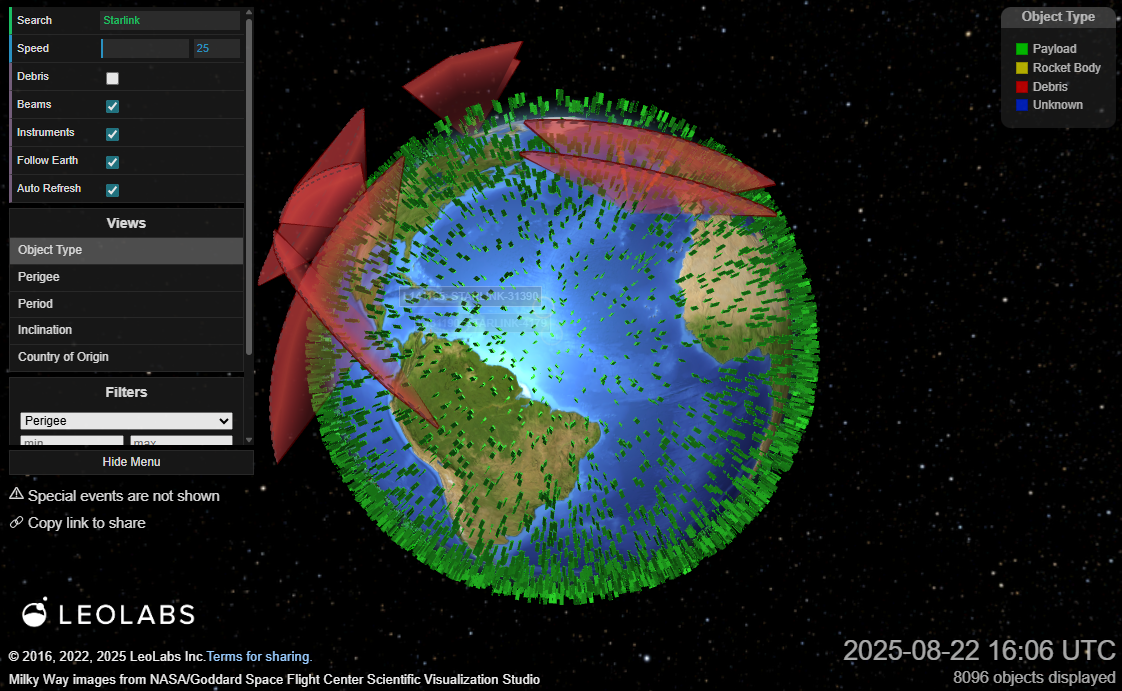

This bottleneck coincided with the emergence of low Earth orbit (LEO) satellite communications (SatCom) internet services. Led by SpaceX’s Starlink, a new generation of SatCom is fundamentally reshaping that equation. With over 8,000 satellites already in orbit and a planned constellation of 12,000, Starlink delivers internet from LEO. The lower orbital altitude sharply reduces signal latency compared with legacy geostationary systems and supports much higher throughput, making high-performance connections possible even in the most isolated environments. Advanced technologies further maximize bandwidth and minimize interference: phased array antennas dynamically adjust their beams for optimal satellite communication, and mesh networking with inter-satellite laser links optimizes data routing and reduces reliance on ground stations.
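To make the altitude argument concrete, the short calculation below estimates the minimum propagation delay for a request and reply relayed through a satellite directly overhead. It is a back-of-the-envelope sketch, not a model of any real network: the 550 km LEO altitude is an assumed nominal figure (actual shells vary), 35,786 km is the standard geostationary altitude, and processing, queuing, and ground routing are ignored.

```python
# Back-of-the-envelope propagation delay: LEO versus geostationary orbit.
# Assumptions (not from the article): satellite directly overhead, signal
# travels at the speed of light in vacuum, no processing or queuing delay.

SPEED_OF_LIGHT_KM_S = 299_792.458
LEO_ALTITUDE_KM = 550.0      # assumed nominal LEO shell altitude
GEO_ALTITUDE_KM = 35_786.0   # geostationary altitude above the equator


def min_round_trip_ms(altitude_km: float) -> float:
    """Lower bound on round-trip time through a single relay satellite.

    The request goes up and down once, and the reply does the same,
    so the signal crosses the altitude four times.
    """
    one_traversal_s = altitude_km / SPEED_OF_LIGHT_KM_S
    return 4 * one_traversal_s * 1000.0


if __name__ == "__main__":
    for name, altitude in (("LEO", LEO_ALTITUDE_KM), ("GEO", GEO_ALTITUDE_KM)):
        print(f"{name}: at least {min_round_trip_ms(altitude):.1f} ms round trip")
```

Under these assumptions the physical floor is on the order of 7 ms for LEO and close to half a second for geostationary orbit. Real-world latency is higher in both cases, but the gap explains why interactive and time-sensitive workloads were impractical over legacy satellite links.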

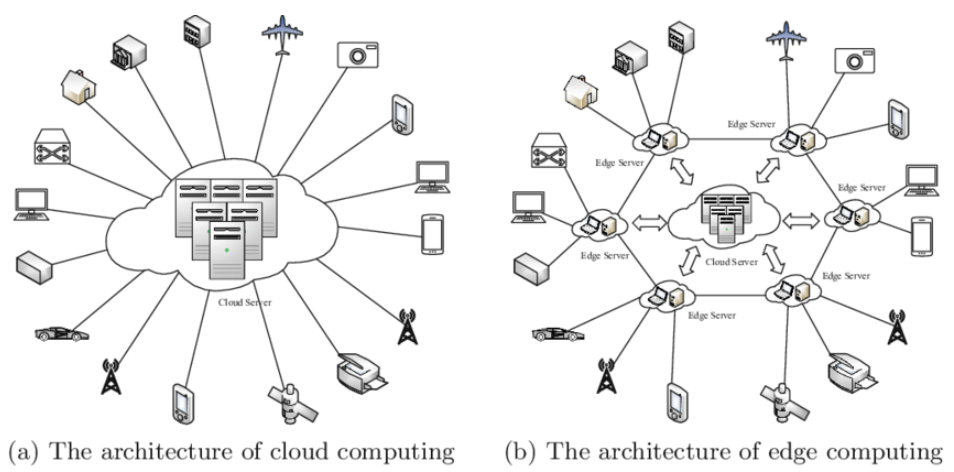

The edge data bottleneck is not only a connectivity problem; it also exposes the deeper limitations of traditional cloud computing. What began with John McCarthy’s 1955 vision of shared computing power evolved into centralized data centers and, eventually, on-demand cloud infrastructure that reshaped how software and services were delivered. But today, five providers control a staggering 80 percent of the market, costs have soared, and many regions remain underserved. While the cloud transformed sectors like social media and SaaS, many industrial operations have been left behind. Because hyperscale data centers require massive land, capital, and connectivity, providers concentrate near major metros. Many organizations were drawn in by the initial convenience and low entry cost of the cloud, only to find themselves locked into centralized systems with limited flexibility to scale down. Simply put, the current cloud was never designed to extend this far: existing providers built infrastructure for the world they knew, not for one that keeps evolving and growing.

This demand for decentralization has prompted a fundamental rethink of how infrastructure is designed. Breakthroughs in edge data centers present a compelling opportunity to bridge the digital divide by putting compute at the data source. Value now comes from processing data locally instead of dragging everything back to centralized cloud regions. Keeping computation close to the source also strengthens privacy, security, and regulatory compliance by reducing unnecessary data transfer and exposure. And because only the most relevant signals are sent upstream, network load and bandwidth costs are significantly reduced. Edge data centers make this possible by offering smaller footprints and modular designs purpose-built for time-sensitive processing, filtering, and inference. They can run autonomously even when backhaul is disrupted and synchronize with core systems when connectivity resumes. Rather than functioning as distant endpoints, they act as local execution environments, closing the loop between data and decision.
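The pattern described above, filtering locally, sending only relevant signals upstream, and catching up after an outage, is often called store-and-forward. The sketch below is one minimal way to express it, not a description of any vendor's implementation. The `Reading` and `EdgeForwarder` names, the relevance rule, and the buffer size are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque


@dataclass
class Reading:
    sensor_id: str
    value: float


class EdgeForwarder:
    """Filters readings at the edge and buffers relevant ones while the uplink is down."""

    def __init__(
        self,
        is_relevant: Callable[[Reading], bool],
        send: Callable[[Reading], None],
        max_buffer: int = 1000,
    ) -> None:
        self._is_relevant = is_relevant
        self._send = send
        # Bounded buffer: when full, the oldest buffered reading is dropped.
        self._buffer: Deque[Reading] = deque(maxlen=max_buffer)

    def ingest(self, reading: Reading, link_up: bool) -> None:
        """Handle one reading; irrelevant readings never leave the site."""
        if not self._is_relevant(reading):
            return
        self._buffer.append(reading)
        if link_up:
            self.flush()

    def flush(self) -> int:
        """Send buffered readings oldest-first; return how many were sent."""
        sent = 0
        while self._buffer:
            # Send before removing, so a failed send keeps the reading buffered.
            self._send(self._buffer[0])
            self._buffer.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    uploaded = []
    forwarder = EdgeForwarder(lambda r: r.value > 80.0, uploaded.append)
    forwarder.ingest(Reading("pump-1", 72.0), link_up=False)  # filtered out locally
    forwarder.ingest(Reading("pump-1", 91.5), link_up=False)  # buffered, link is down
    forwarder.ingest(Reading("pump-1", 95.0), link_up=True)   # link is back: both are sent
    print([r.value for r in uploaded])
```

The bounded buffer is a deliberate trade-off: during a long outage the site keeps the most recent relevant readings and discards the oldest, instead of exhausting local storage.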

This shift is especially crucial for applications powered by AI and machine learning, where minutes, or even seconds, can determine outcomes. Computer vision for safety and loss prevention, time-series forecasting for maintenance and production, anomaly detection for quality and energy, and optimization for routing and staffing all depend on fresh local signals. A grocery chain prevents spoilage only if a temperature alert becomes an instruction within minutes, not hours. A mine avoids unplanned downtime only if vibration patterns are evaluated continuously and tied to maintenance work orders while equipment is still running. A port improves throughput only if berth cameras, yard sensors, and gate events resolve to a live model that coordinates people and assets in real time. In transportation, the United States alone experiences on the order of three train derailments a day; the difference between an alert and an after-action report is often measured in minutes. Miners still face gas, heat, and flooding risks where warnings arrive late. Military personnel often operate in communications-limited environments where decisions lean more on judgment than timely data.
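As an illustration of what "evaluated continuously" can mean for a signal such as equipment vibration, the sketch below flags readings that deviate sharply from a rolling baseline. It is a deliberately simple z-score detector using only the Python standard library, not the method used by any product named in this article. The class name, window size, and threshold are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags values that sit far from the mean of recent normal readings."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_samples: int = 5) -> None:
        if min_samples < 2:
            raise ValueError("min_samples must be at least 2 to compute a standard deviation")
        self._history = deque(maxlen=window)
        self._threshold = threshold
        self._min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if the value is anomalous relative to the rolling baseline."""
        is_anomaly = False
        if len(self._history) >= self._min_samples:
            baseline = mean(self._history)
            spread = stdev(self._history)
            if spread > 0:
                is_anomaly = abs(value - baseline) / spread > self._threshold
        # Learn only from normal readings so a fault does not become the new baseline.
        if not is_anomaly:
            self._history.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingAnomalyDetector()
    vibration_mm_s = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 5.0, 1.0]
    for reading in vibration_mm_s:
        if detector.observe(reading):
            print(f"anomaly: {reading} mm/s, raise a maintenance work order")
```

Because the check needs only a few dozen recent samples, it can run on site next to the equipment, and the alert can be tied to a work order without waiting on a round trip to a distant data center.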

All of this brings the industry to a practical inflection point. As connectivity and compute at the edge begin to reach levels previously unattainable, the longstanding cloud-first model gives way to distributed intelligence. Sites no longer function as disconnected outposts but as fully integrated, autonomous regions. This is the baseline for global, real-time operations and the foundation for scaling AI across the physical world.

Operationalizing Enterprise Edge with SatCom

Armada was founded to operationalize this distributed intelligence with reliability and scalability across disconnected environments around the world. While Starlink has dramatically expanded bandwidth availability in remote areas, access alone doesn’t make a system enterprise-ready. Starlink was primarily designed for individuals and small teams, not fleets of mission-critical sites that demand continuous uptime, centralized visibility, and standardized SLAs. There’s no unified framework for provisioning, managing, or supporting terminals at scale. Enterprise control is largely absent. For businesses that can’t afford ambiguity in network reliability, this is a gating concern. Past efforts to build edge data centers also fell short. Many were too expensive to deploy broadly, too fragile for harsh conditions, or too narrow in scope—tied to single workloads or fixed locations. They lacked lifecycle tooling, integration with modern networks, and the flexibility to scale with demand.

Armada’s approach closes these gaps with a full-stack distributed edge cloud: compute and storage positioned near where data is generated, managed centrally across fleets of remote sites. The system combines proprietary hardware built for the field, orchestration at enterprise scale, and software designed for real operating conditions. It integrates Starlink and other network paths into a unified fabric, deploys rugged modular data centers that run AI and analytics locally, and provides a control plane that governs the entire footprint. The containerized compute units can be shipped, installed, and brought online quickly, with power, cooling, and access engineered for harsh industrial environments and capacity scaled to the workload. On site, the software stack processes unstructured sensor data into actionable decisions. Models run locally, so alerts, detections, and optimizations execute immediately rather than waiting for a cloud round trip. When connectivity is available, the system synchronizes state, applies updates, and transmits only the data that matters upstream.

Security is central to its design. Enterprises are increasingly wary of relying on a single vendor’s security model, especially when tied to centralized infrastructure. By processing and storing data closer to its source, Armada reduces exposure from unnecessary movement and narrows the attack surface. Policies for retention, redaction, and access can be enforced locally and audited centrally. No system eliminates risk entirely, but distributed compute enables layered defenses and clearer compliance paths across energy, transportation, retail, maritime, and defense.

Sustainability is another pressing factor. Hyperscale data centers demand ever more power and water, with global consumption projected to exceed 20 percent of electricity supply by 2030. In Ireland, they already use more energy than the rural population. Google’s average water use per facility approaches 450,000 gallons a day. Efficiency gains have not kept pace with exponential growth. Without structural change, the model is untenable. Armada’s distributed approach eases the total bandwidth demand and reduces strain on centralized facilities, while lowering overall energy and resource use.

The company’s leadership reflects the caliber required for global operational maturity. CEO Dan Wright spent a decade turning complex platforms into enterprise standards at AppDynamics and later at DataRobot. COO Jon Runyan guided Okta through IPO and global expansion, building the contracting and compliance systems that turn pilots into platforms. Founding CTO Pradeep Nair, who led Azure’s edge and infrastructure groups, designed the control plane for resilience under real-world conditions. Chief AI Officer Prag Mishra brings nearly two decades at Amazon and Microsoft, where he developed world-class expertise in computer vision, robotics, and machine learning. He now ensures that raw sensor data is transformed into operational decisions rather than remaining lab outputs. Together, they lead a team of more than 300, bringing engineering rigor, operational scale, and cutting-edge applied AI capabilities that far outpace other edge efforts.

Reinventing the Architecture of a Unified Ecosystem

Armada is a platform, not a point product. At its foundation is the Armada Edge Platform (AEP), a control plane that lets central teams provision connectivity, roll out software, enforce policy, and monitor fleets of remote sites as one system. Built on AEP are the company’s two core pillars—Atlas, the software and orchestration layer with its integrated marketplace that delivers first- and third-party applications without requiring complex systems integration, and Galleon and Leviathan, the rugged data center infrastructure that delivers compute and AI at scale.

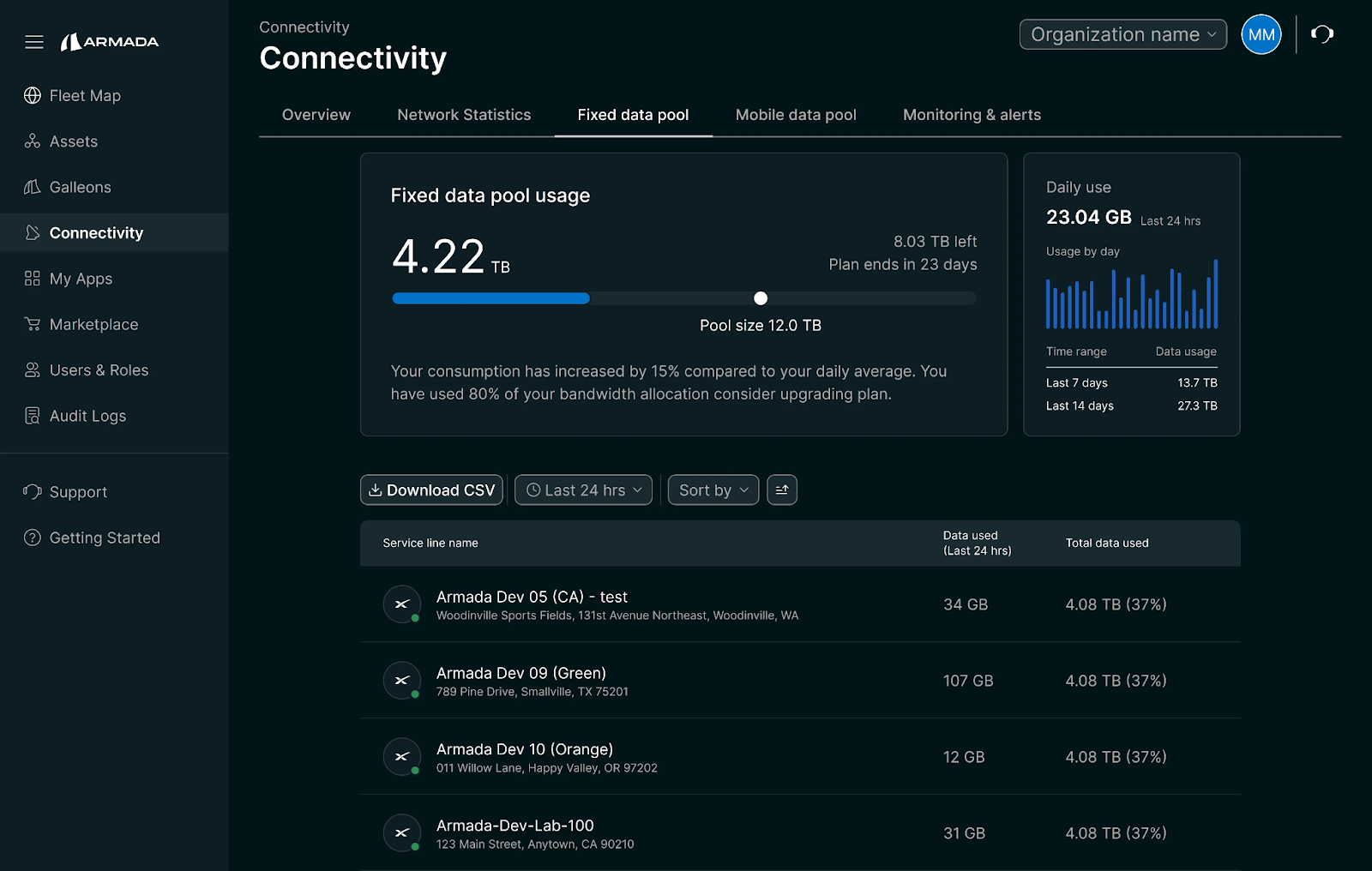

Atlas is the operational brain. It turns a fleet of remote assets into a manageable network. Starlink terminals and SD-WAN routers can be governed from a single console; data plans are pooled across terminals; traffic is shaped with QoS and bonded across satellite, LTE/5G, and terrestrial links. Performance and health are visible in real time. Enterprise features are standard: SSO with Entra/Okta/Google, role-based access controls, detailed audit logs, and a SOC 2/ISO 27001 security posture, with procurement available through Azure Marketplace. Atlas also extends into workloads, with dedicated modules for drone tracking and media management, long-horizon analytics for usage and reliability, and an AI Assist co-pilot for diagnostics, capacity planning, and predictive maintenance.

Armada Marketplace is how software and devices flow to the edge without friction. Customers can deploy Armada’s own OpsAI applications, such as OpsSafety, a first-party AI application for real-time safety and incident prevention. OpsSafety ingests camera and drone feeds to deliver live alerts and searchable context, from PPE compliance and restricted-zone breaches to fire detection and slip/fall risks. Customers can also deploy select partner offerings, such as SCADA or hyperspectral analytics, or bring their own containerized services and push them to target sites in a few clicks. Connected assets such as cameras, sensors, or terminals can also be purchased and shipped pre-registered to AEP for zero-touch onboarding, enabling fleets to grow without creating operational sprawl.
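The QoS shaping and link bonding attributed to Atlas above can be pictured with a simple strict-priority allocator. The sketch is illustrative only and does not reflect Atlas's actual algorithm or API: it treats all healthy links as one pooled capacity (real bonding typically schedules per packet or per flow across links), and the `Link`, `Flow`, and `allocate` names and the sample numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Link:
    name: str
    capacity_mbps: float
    up: bool = True


@dataclass
class Flow:
    name: str
    demand_mbps: float
    priority: int  # lower number means more important


def allocate(flows: List[Flow], links: List[Link]) -> Dict[str, float]:
    """Grant bandwidth in strict priority order from the pooled capacity of healthy links."""
    remaining = sum(link.capacity_mbps for link in links if link.up)
    grants: Dict[str, float] = {}
    for flow in sorted(flows, key=lambda f: f.priority):
        granted = min(flow.demand_mbps, remaining)
        grants[flow.name] = granted
        remaining -= granted
    return grants


if __name__ == "__main__":
    flows = [
        Flow("video-backhaul", demand_mbps=200.0, priority=2),
        Flow("safety-alerts", demand_mbps=5.0, priority=0),
        Flow("telemetry", demand_mbps=40.0, priority=1),
    ]
    links = [Link("satellite", 100.0), Link("lte", 30.0), Link("fiber", 500.0, up=False)]
    print(allocate(flows, links))

    links[0].up = False  # satellite outage: only LTE capacity remains
    print(allocate(flows, links))
```

In this toy model, safety alerts keep their full allocation when the satellite link drops, telemetry is trimmed, and bulk video waits. That is the behavior an operator would want from priority-based shaping at a site with intermittent backhaul.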

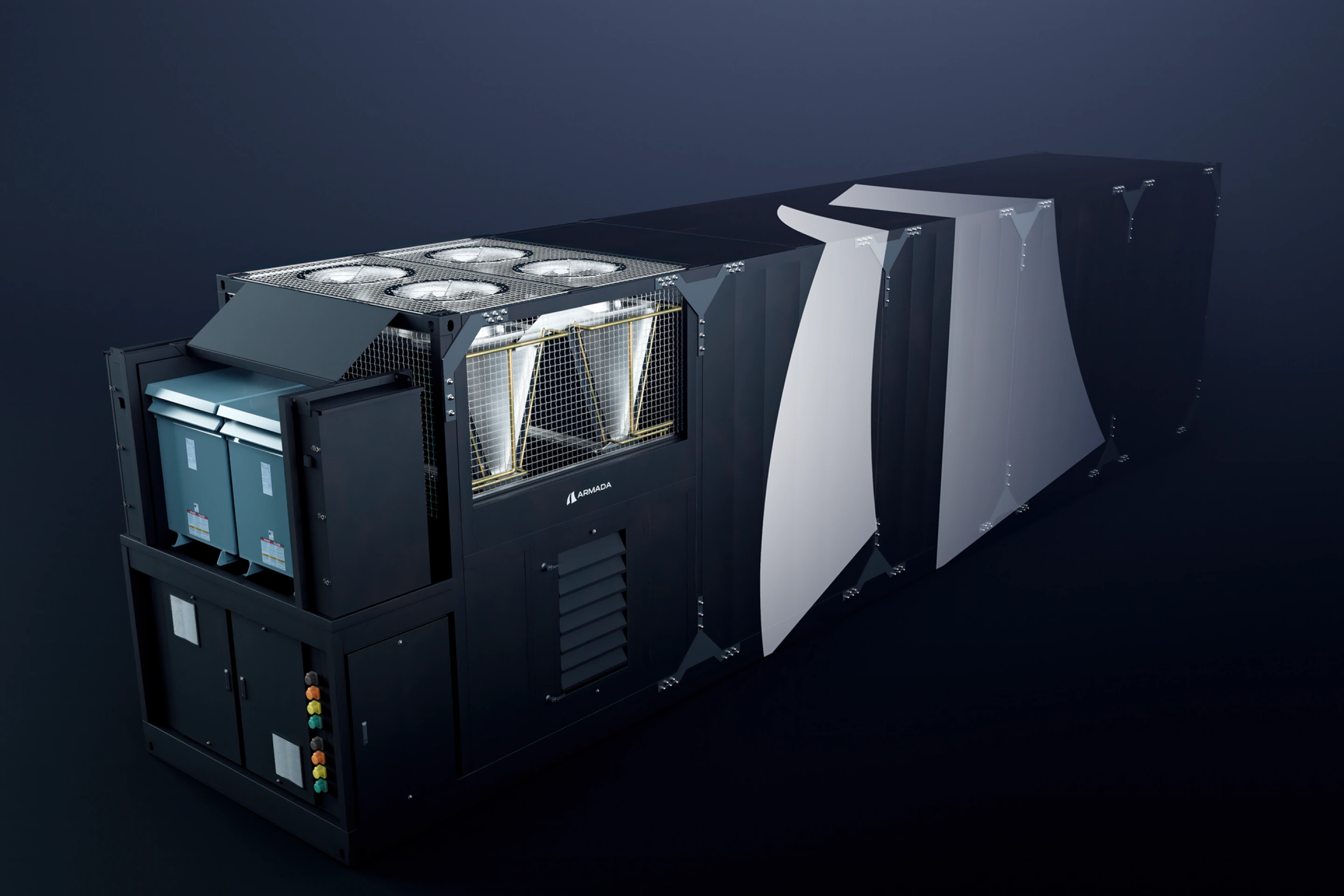

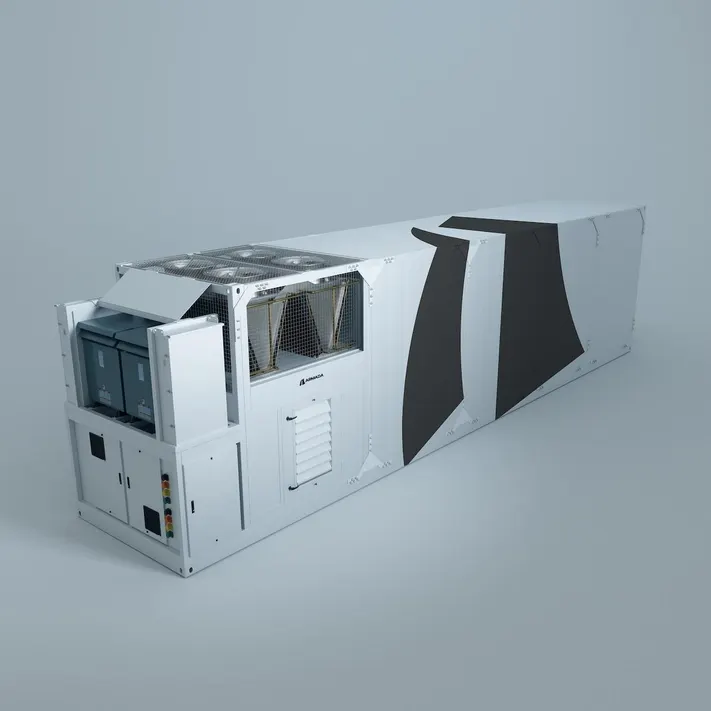

Galleon is Armada’s line of rugged, containerized edge data centers. Each unit comes pre-integrated with power, cooling, networking, storage, and accelerators, so AI and analytics workloads can run immediately after deployment. Form factors are designed for different operating environments: the Cruiser for space-constrained sites, and the larger Triton, a 40′ enclosure with five racks, for heavier workloads. All Galleons are engineered for harsh conditions with environmental sensors and on-unit video, and they automatically enroll into AEP. From there, Commander (within AEP) handles software rollout, monitoring, and lifecycle management, so sites as varied as a mine, port, or offshore platform are operated the same way without bespoke scripts.

Leviathan extends the Galleon concept to megawatt scale. It is a relocatable, liquid-cooled AI/HPC data center that delivers ten times the compute capacity of Triton, enabling large-scale training and inference to be deployed in weeks instead of years. Because Leviathan is orchestrated through AEP, workload placement, updates, observability, and policy all follow the same model as Galleon.

Taken together, these individual product suites function as one synchronized system. Atlas makes fleets governable, AI applications like OpsSafety turn raw feeds into timely action, and the Marketplace keeps workloads and hardware flowing through a single, controlled channel. Galleon delivers turnkey compute in the field, and Leviathan now brings that model to megawatt scale. It is the practical stack for running real operations in places conventional cloud infrastructure could never reach.

Marching Towards a Fully Connected World

In its first full year in the market, Armada reported record growth with customers across oil and gas, mining, telecom, hospitality, and government, including Targa Resources, Atlas Energy, SQM, Mars, Marriott, Vocus, Tampnet, the U.S. Navy, and Alaska’s Department of Transportation. Its platform has already scaled to managing thousands of Starlink terminals across more than sixty countries, transforming what was once a patchwork of one-off field installs into a single, governable network. Even purchasing and operations have been streamlined, with customers able to buy and manage through Azure Marketplace, embedding Armada into existing enterprise procurement and management workflows.

More recently, in Saudi Arabia, Aramco Digital—the technology arm of the world’s largest energy producer—has partnered with Microsoft and Armada to deploy Galleon units running Azure services directly on site. These containerized edge data centers are already processing safety and operations data in real time across energy facilities, where downtime translates into millions in losses and heightened safety risk. In defense, Second Front’s Frontier platform, used by the U.S. DoD to accelerate software accreditation and deployment, is now running on Azure Local inside Galleons, proving that applications built for the cloud can actually be deployed securely at the edge in contested environments, not just in demos.

Armada is scaling quickly on both the capital and deployment fronts. In July, the company closed a $131M strategic round to accelerate manufacturing and field rollouts, with backing from both financial and strategic investors. At the same time, Leviathan, the newly revealed megawatt-scale data center introduced alongside Galleon, has moved into early siting with energy partners in North Dakota, Texas, West Virginia, and Louisiana to place compute where surplus or low-cost power is abundant. The Marketplace is also gaining traction, now hosting third-party AI offerings such as Avathon’s prescriptive maintenance and computer vision applications, which can be pushed directly to remote industrial sites without costly integration projects.

Zooming out, the trajectory is unmistakable. As SatCom constellations densify, connecting even the most remote locations will become routine, while the devices waiting for this foundation are multiplying fast. Soon, thousands of humanoids will work on factory floors, robotic dogs will patrol industrial sites, drones will monitor pipelines and forests, fleets of autonomous vehicles will move goods in sync, surveillance systems will parse live video, and sensors will be embedded across machines, appliances, energy systems and other built environments. All of them will depend on real-time compute, connectivity, and orchestration at the edge.

This is the imminent future in which Armada thrives. The original promise of Industry 4.0 is finally within reach. Where past IoT deployments lacked the operating environment to make a difference, Armada combines SatCom, compute, and AI into edge infrastructure enterprises can finally depend on, giving them a defining advantage in how they streamline and scale their businesses. Space Capital invested early in Armada as a defining example of SatCom Applications, and we’ll continue to support the next wave of SatCom-native companies bringing compute and intelligence to the places where the global economy actually runs.